Lethal Autonomous Weapons Systems: A Bad Idea?

On Monday, Defence Minister Nirmala Sitharaman inaugurated the ‘Stakeholders’ Workshop on Artificial Intelligence in National Security and Defence, Listing of Use Cases’. The government stakeholders included officials from the DRDO, the Department of Defence (R&D) and the Defence Ministry. According to PIB, the multi-stakeholder taskforce constituted by the Defence Minister includes, apart from government departments, members from academia, industry and start-ups. The taskforce, chaired by N. Chandrasekaran, Chairman of Tata Sons, met in February and April this year.

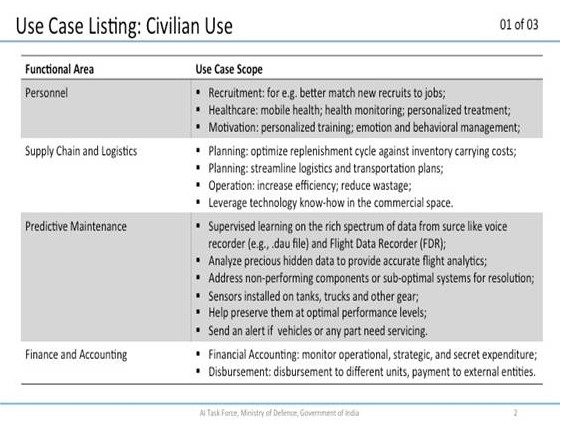

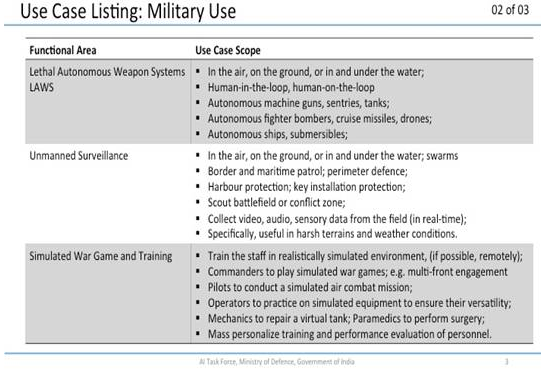

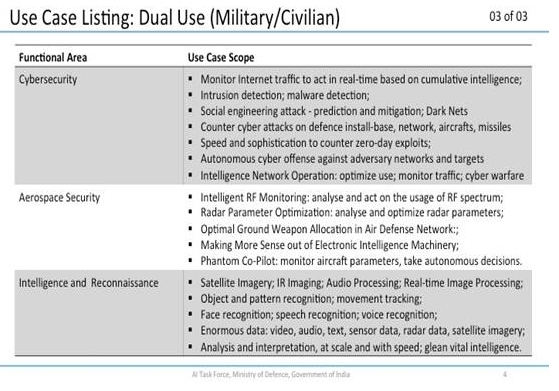

The previous meetings dealt with artificial intelligence (AI) behaviour and with how to use the data generated by AI to develop applications; this data would be analysed by machine learning and deep learning algorithms. The last meeting ended with the stakeholders deciding to hold a workshop at which 'use cases' would be listed. The present workshop listed three categories of 'use cases': civilian, military, and military/civilian (see the images above).

The first problem is the reliance on deep learning, particularly for military applications. Deep learning is a subset of machine learning, which is itself a subset of the larger artificial intelligence (AI) umbrella. In machine learning, an algorithm improves at a task through repeated training on examples rather than by following rules written out for it in advance; deep learning uses the same approach. In conventional machine learning, for example, an algorithm is trained to recognise certain types of information, as when your email service filters out spam. The problem is that conventional machine learning still requires a degree of human input, and it makes mistakes: sometimes important emails land in the spam folder and vice versa. Deep learning, on the other hand, can determine more accurately what is spam and what is not, because it makes decisions more autonomously. As Prabir Purkayastha of the Delhi Science Forum put it, “Deep learning involves making rules where none exist,” meaning that in the absence of specific instructions, the algorithm frames new rules to complete its task.
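To make the spam example concrete, here is a minimal sketch of a classic (non-deep) machine-learning spam filter: a naive Bayes classifier, which learns word frequencies from a handful of labelled messages instead of following hand-written rules. The training messages, the function names `train` and `classify`, and the simple whitespace tokenizer are all hypothetical illustrations; this is not how any particular email service works, and it is not deep learning.

```python
import math
from collections import Counter

# Hypothetical labelled training messages: (text, label).
TRAINING_DATA = [
    ("win a free prize now", "spam"),
    ("claim your free money", "spam"),
    ("meeting agenda for monday", "ham"),
    ("please review the draft report", "ham"),
]


def tokenize(text: str) -> list[str]:
    # Lower-case and split on whitespace; real filters use far richer features.
    return text.lower().split()


def train(examples: list[tuple[str, str]]) -> dict:
    """Learn per-label word counts and label counts from (text, label) pairs."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(tokenize(text))
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return {"words": word_counts, "labels": label_counts, "vocab": vocab}


def classify(model: dict, text: str) -> str:
    """Pick the label with the highest log-probability (naive Bayes, add-one smoothing)."""
    total_docs = sum(model["labels"].values())
    vocab_size = len(model["vocab"])
    best_label, best_score = None, -math.inf
    for label in ("spam", "ham"):
        counts = model["words"][label]
        total_words = sum(counts.values())
        # Start from the prior: how common this label was in training.
        score = math.log(model["labels"][label] / total_docs)
        for word in tokenize(text):
            # Unseen words get a small non-zero probability thanks to the +1.
            score += math.log((counts[word] + 1) / (total_words + vocab_size))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


model = train(TRAINING_DATA)
print(classify(model, "free prize inside"))         # spam
print(classify(model, "review the meeting notes"))  # ham
```

Because the filter only knows the words it saw during training, a legitimate message that happens to use spam-like vocabulary can still be misfiled, which is the kind of error the paragraph describes.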

Using deep learning AI in the Internet of Things, as well as for defence or military purposes, raises difficult ethical questions, because too much would depend on the algorithm's autonomous decision-making. For example, if a fully autonomous vehicle faces an unavoidable crash, what would it prioritise: the lives of those outside the vehicle, or those of its occupants?

In military applications concerning Lethal Autonomous Weapons Systems (LAWS), the stakes are potentially higher. Would a machine be able to distinguish accurately between a combatant and a non-combatant? Between an enemy and an ally? Even if the machine can perform these recognition tasks, what is its margin of error? In the case of Aadhaar, for example, a 5 per cent error rate in identifying more than 120 crore registered users results in a very large number of people being denied their entitlements.
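The margin-of-error question can be made concrete with simple arithmetic. The sketch below takes the article's Aadhaar figures (a 5 per cent error rate across roughly 120 crore users) and adds a purely hypothetical target-recognition scenario using Bayes' theorem. Here "sensitivity" is the share of real combatants the system flags, "specificity" the share of non-combatants it correctly leaves alone, and "prevalence" the share of people in the area who are actually combatants; the numbers and function names are illustrative assumptions, not data about any real system.

```python
def expected_errors(population: int, error_rate: float) -> int:
    """Expected number of people misidentified at a given error rate."""
    return round(population * error_rate)


def share_of_flags_correct(prevalence: float, sensitivity: float, specificity: float) -> float:
    """Bayes' theorem: probability that a person the system flags really is a combatant."""
    true_flags = sensitivity * prevalence                # combatants correctly flagged
    false_flags = (1 - specificity) * (1 - prevalence)   # non-combatants wrongly flagged
    return true_flags / (true_flags + false_flags)


AADHAAR_USERS = 1_200_000_000  # "over 120 crore" registered users, per the article
print(expected_errors(AADHAAR_USERS, 0.05))  # 60000000, i.e. about 6 crore people

# Hypothetical recognition system, right 99% of the time in both directions,
# deployed where only 1 in 100 people is actually a combatant.
print(share_of_flags_correct(prevalence=0.01, sensitivity=0.99, specificity=0.99))  # ≈ 0.5
```

Even under these generous assumptions, when combatants are rare roughly half of the people such a system flags would be non-combatants.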

In 2016, the Fifth Review Conference of the High Contracting Parties to the Convention on Certain Conventional Weapons (CCW) constituted a Group of Governmental Experts (GGE) on emerging technologies in the area of LAWS, with a focus on their military applications. On December 22 last year, the GGE presented its final report. It concluded that the current CCW framework is broad enough to accommodate LAWS, but that a balance must be struck between keeping options open for civilian uses of the technology and regulating its military use. It also stated that international humanitarian law would continue to apply fully, and that the group would meet again for 10 days in 2018 to work out the details.

The fact that the GGE has not condemned LAWS outright should be worrisome, particularly as the CCW is ambiguous on the issue of internal conflict. Article 1, paragraph 3 binds each party to an internal armed conflict to the Convention's obligations. However, paragraph 4 provides that nothing in the Convention or its Protocols may be invoked to affect a State's responsibility, by all legitimate means, to maintain or re-establish law and order, or to defend its national unity and territorial integrity. It is therefore not unthinkable that new AI-driven weapons systems would be used in counter-insurgency operations. On the one hand, a fully autonomous system could devastate combatants and non-combatants alike. On the other hand, considering the Indian State's record on 'encounters', a semi-autonomous system is equally worrisome.

The next issue arises in a conflict between States. At present, there are several known nuclear-armed States, and their number is likely to increase as more States subscribe to a deterrence theory of security. The question then becomes one of haves and have-nots. In a conflict between a State possessing LAWS and a State without LAWS but with nuclear weapons, where would the nuclear threshold lie? Would a ‘no first use’ policy still apply?

At present, only one of India's neighbours is actively involved in AI development. China's stated position is that it supports an outright ban on LAWS. However, in the absence of an internationally accepted legal definition of LAWS, it may be too early to rejoice: China's own definition of LAWS is narrow, limited to systems that are both lethal and completely autonomous of any human agency. India's other neighbour, with which the security establishment is preoccupied, has also supported a ban on LAWS. Under these circumstances, India does not seem to have any compelling reason to develop LAWS, unless they are intended for use in the country's internal conflicts.
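Why a narrow definition matters can be sketched as two tests over a simplified, hypothetical model of a weapons system. The three autonomy levels, the class and function names, and the "broader" test are assumptions made for illustration; only the narrow test paraphrases the definition described above (lethal and completely autonomous of any human agency).

```python
from dataclasses import dataclass
from enum import Enum


class Autonomy(Enum):
    # Hypothetical, simplified autonomy levels for illustration only.
    HUMAN_OPERATED = 1    # a person makes every targeting decision
    SEMI_AUTONOMOUS = 2   # the machine acts largely on its own, but a person retains some control
    FULLY_AUTONOMOUS = 3  # no human agency once the system is activated


@dataclass
class WeaponSystem:
    name: str
    lethal: bool
    autonomy: Autonomy


def covered_by_narrow_definition(system: WeaponSystem) -> bool:
    """Narrow test paraphrasing the article: lethal AND completely autonomous."""
    return system.lethal and system.autonomy is Autonomy.FULLY_AUTONOMOUS


def covered_by_broader_definition(system: WeaponSystem) -> bool:
    """Hypothetical broader test: any lethal system with some machine autonomy."""
    return system.lethal and system.autonomy is not Autonomy.HUMAN_OPERATED


drone = WeaponSystem("hypothetical semi-autonomous drone", lethal=True,
                     autonomy=Autonomy.SEMI_AUTONOMOUS)
print(covered_by_narrow_definition(drone))   # False: a ban built on this test would not reach it
print(covered_by_broader_definition(drone))  # True
```

Under the narrow test, a lethal system that keeps even nominal human involvement falls outside the definition, and therefore outside any ban built on it.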

Get the latest reports & analysis with people's perspective on Protests, movements & deep analytical videos, discussions of the current affairs in your Telegram app. Subscribe to NewsClick's Telegram channel & get Real-Time updates on stories, as they get published on our website.